Prompt Engineering is a 21st Century Skill

This post originally appeared on LinkedIn in January 2023.

"prompt engineering" is a rapidly emerging skill for 21st century professionals - not just for product managers.

here are a few things i learned getting OpenAI's ChatGPT to feel like a cheat code while helping a friend wordsmith his career pivot.

tl;dr

- broad input + "make something up" = fluff & regurgitation

- specific example + "regenerate this" = cheat codes

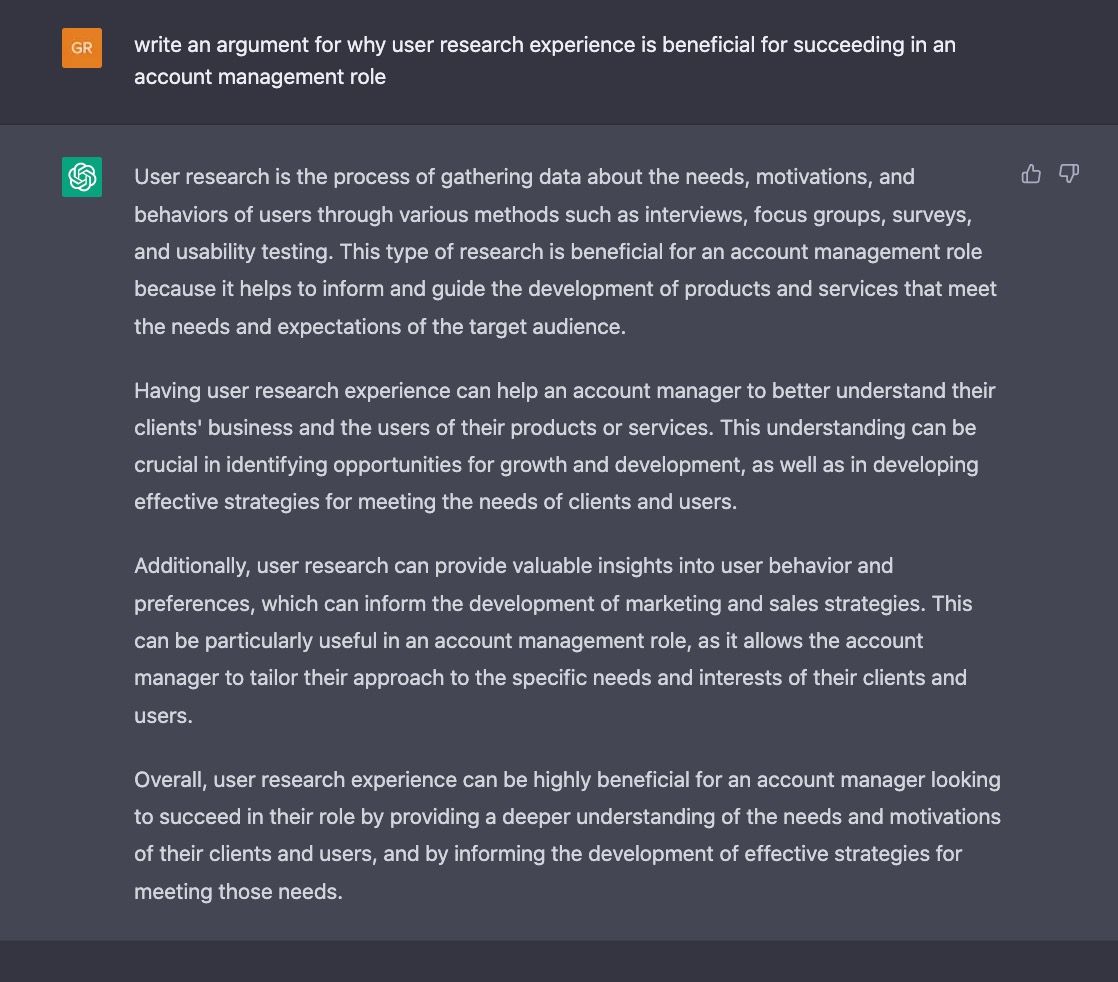

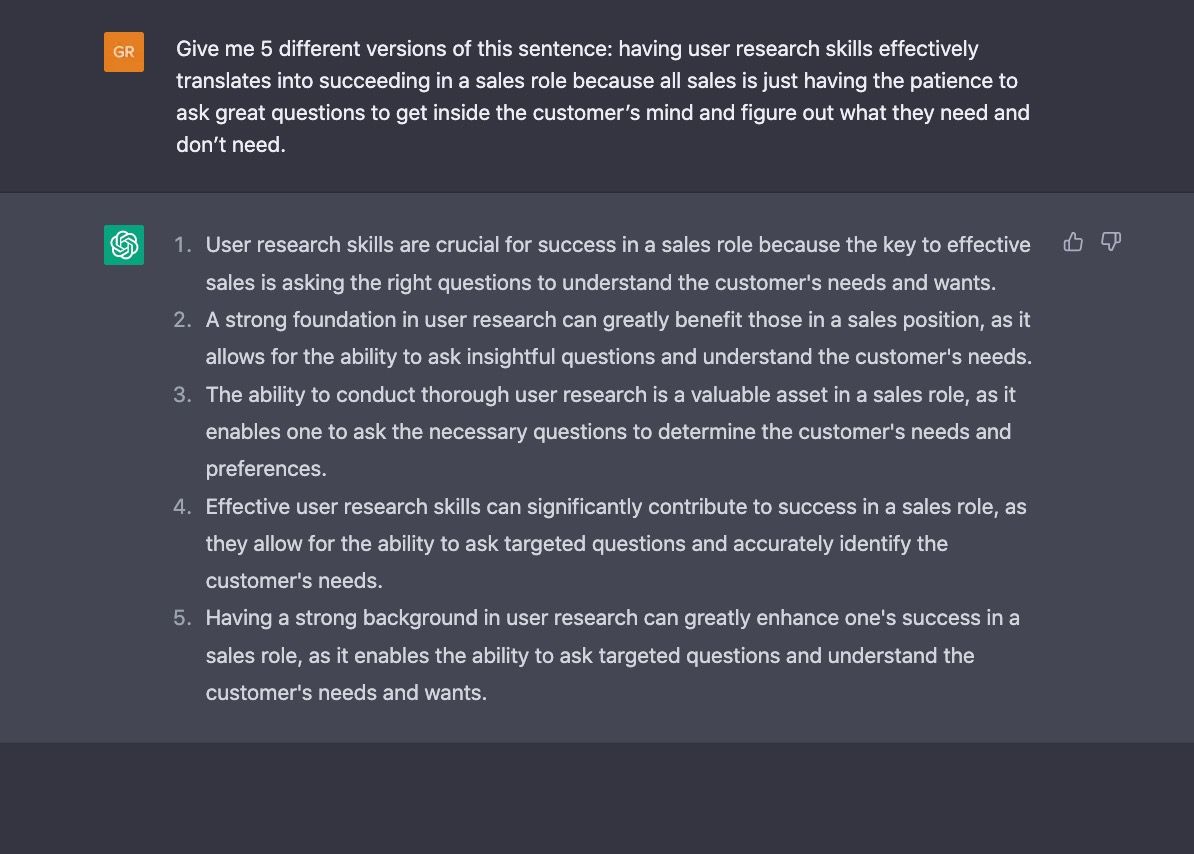

same AI. same goal. yet the two images above show vastly different results.

the key difference: how we "engineered" the prompts.

prompt design 101: specificity

the trick to making AI like ChatGPT feel like a cheat code is specificity.

i told my friend to make 2 tweaks to his prompts:

1. provide a more specific input - e.g. an example sentence

2. request a more specific output - e.g. regenerate 5 versions
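here's a minimal sketch of those two tweaks in python. it only builds the prompt text and doesn't call any API. the function names, the exact prompt wording, and the example sentence are all made up for illustration - they are not the prompts from the screenshots.

```python
def broad_prompt(goal: str) -> str:
    """broad input + 'make something up' request (the weak version)."""
    return f"write something about {goal}."


def specific_prompt(example: str, versions: int = 5) -> str:
    """specific example + 'regenerate this' request (the stronger version)."""
    example = example.strip()
    if not example:
        # tweak 1: the model needs a concrete sentence to hold on to
        raise ValueError("provide an example sentence")
    if versions < 1:
        raise ValueError("ask for at least one version")
    # tweak 2: ask for a specific output - a fixed number of rewrites
    return (
        f"regenerate {versions} versions of the sentence below, "
        "keeping its meaning:\n\n"
        f'"{example}"'
    )


if __name__ == "__main__":
    # hypothetical example sentence, not taken from the original post
    sentence = "i spent 8 years in sales and now want to move into product."
    print(broad_prompt("my career pivot"))
    print()
    print(specific_prompt(sentence))
```

paste either string into ChatGPT and compare: the second one carries both the example sentence and the explicit "5 versions" request.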

steer LLMs by giving them "concept handles"

broadly speaking, generative AI usually doesn't excel at making stuff up from scratch - especially when it doesn't have much to "hold on" to.

if you don't give the AI much to work with, it will give you a replica of something from its training data - in ChatGPT's case, it just regurgitates a bunch of filler text it learned from the internet.

but when your prompt includes a detailed input - like 1-2 example sentences - appended to a *regenerate* request, then you are giving the AI a lot of "concept handles" to work with - which can lead to outputs that feel like magic.

i'm building a library of prompt techniques, so expect more shortcuts to follow.